Model:

pierreguillou/lilt-xlm-roberta-base-finetuned-with-DocLayNet-base-at-paragraphlevel-ml512

Document Understanding model (finetuned LiLT base at paragraph level on DocLayNet base)

This model is a version of nielsr/lilt-xlm-roberta-base fine-tuned on the DocLayNet base dataset. It achieves the following results on the evaluation set:

- Loss: 0.4104

- Precision: 0.8634

- Recall: 0.8634

- F1: 0.8634

- Token Accuracy: 0.8634

- Paragraph Accuracy: 0.6815

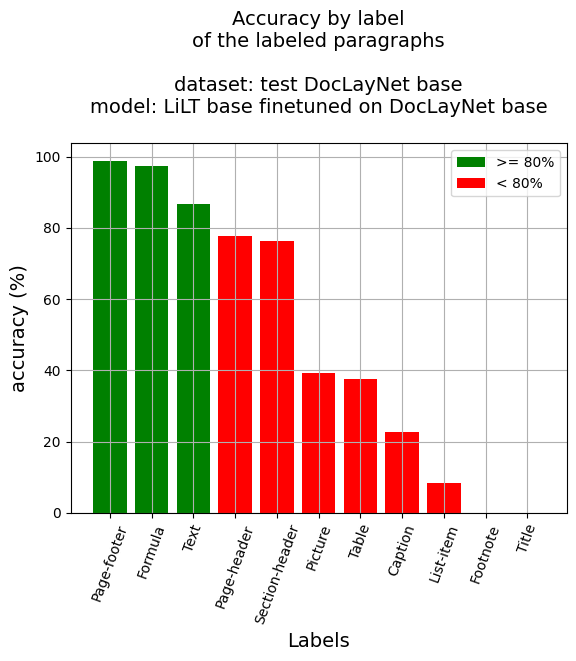

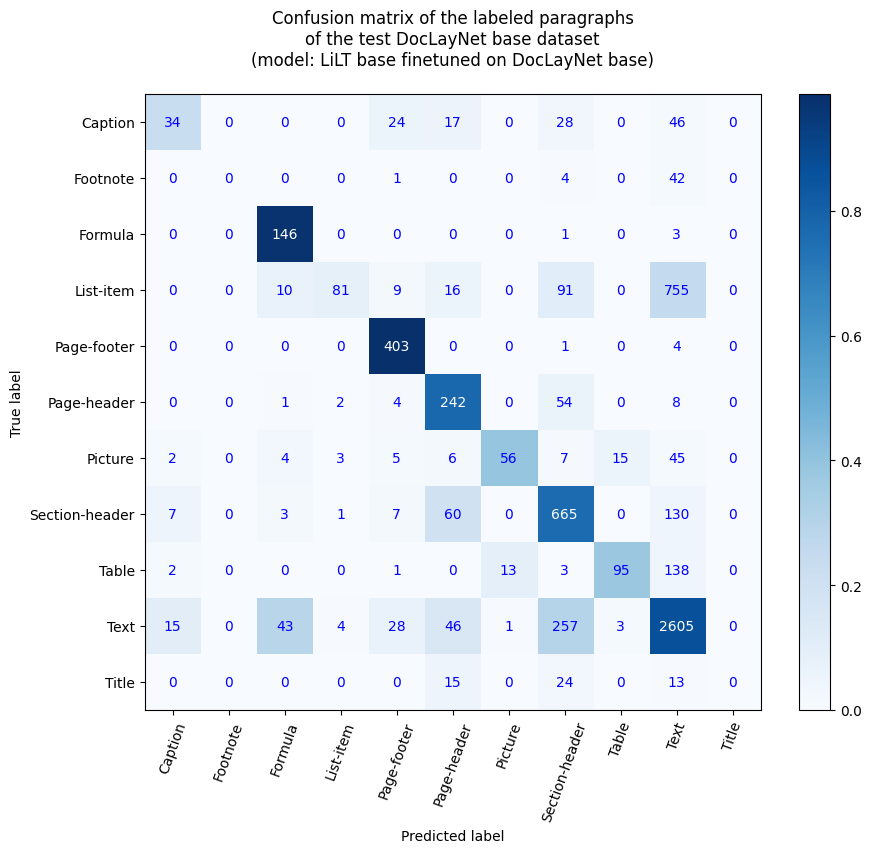

Accuracy at paragraph level

- Paragraph Accuracy: 68.15%

- Accuracy by label

- Caption: 22.82%

- Footnote: 0.0%

- Formula: 97.33%

- List-item: 8.42%

- Page-footer: 98.77%

- Page-header: 77.81%

- Picture: 39.16%

- Section-header: 76.17%

- Table: 37.7%

- Text: 86.78%

- Title: 0.0%
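
The per-label figures above can be reproduced in spirit with a small helper. This is a minimal sketch, assuming that "accuracy by label" means the share of ground-truth paragraphs of a given label that received the correct prediction; the function name `paragraph_accuracy` and the toy data are hypothetical and do not come from the evaluation set.

```python
from collections import Counter


def paragraph_accuracy(true_labels, pred_labels):
    """Return (overall accuracy, {label: accuracy}) for paragraph-level labels."""
    if len(true_labels) != len(pred_labels):
        raise ValueError("true_labels and pred_labels must have the same length")
    # Number of ground-truth paragraphs per label.
    total = Counter(true_labels)
    # Number of correctly predicted paragraphs per ground-truth label.
    correct = Counter(t for t, p in zip(true_labels, pred_labels) if t == p)
    overall = sum(correct.values()) / len(true_labels) if true_labels else 0.0
    by_label = {label: correct[label] / count for label, count in total.items()}
    return overall, by_label


# Toy data for illustration only (assumption, not real evaluation output).
true = ["Text", "Text", "Title", "Table", "Text"]
pred = ["Text", "Text", "Text", "Table", "Caption"]
overall, by_label = paragraph_accuracy(true, pred)
```

Under this definition, a label such as Title scores 0.0% whenever none of its ground-truth paragraphs is predicted correctly, even if the overall accuracy is high.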

References

Blog posts

- Layout XLM base

- LiLT base

- (02/16/2023) Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level

- (02/14/2023) Document AI | Inference APP for Document Understanding at line level

- (02/10/2023) Document AI | Document Understanding model at line level with LiLT, Tesseract and DocLayNet dataset

- (01/31/2023) Document AI | DocLayNet image viewer APP

- (01/27/2023) Document AI | Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)

Notebooks (paragraph level)

- LiLT base

- Document AI | Inference APP at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Document AI | Inference at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Document AI | Fine-tune LiLT on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)

Notebooks (line level)

- Layout XLM base

- Document AI | Inference at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)

- Document AI | Inference APP at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet base dataset)

- Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)

- LiLT base

- Document AI | Inference at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Document AI | Inference APP at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Document AI | Fine-tune LiLT on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)

- DocLayNet image viewer APP

- Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)

APP

You can test this model with this APP in Hugging Face Spaces: Inference APP for Document Understanding at paragraph level (v1).

You can also run the corresponding notebook: Document AI | Inference APP at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

DocLayNet dataset

The DocLayNet dataset (IBM) provides page-by-page layout segmentation ground truth using bounding boxes for 11 distinct class labels on 80,863 unique pages from 6 document categories.

To date, the dataset can be downloaded through direct links or as a dataset from Hugging Face datasets:

- direct links: doclaynet_core.zip (28 GiB), doclaynet_extra.zip (7.5 GiB)

- Hugging Face dataset library: dataset DocLayNet

Paper: DocLayNet: A Large Human-Annotated Dataset for Document-Layout Analysis (06/02/2022)

Model description

The model was finetuned at paragraph level on chunks of 512 tokens with an overlap of 128 tokens. Thus, the model was trained with all layout and text data of all pages of the dataset.
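
The chunking can be pictured as a sliding window over the token sequence of a page. The following is a minimal sketch, assuming the window advances by 512 - 128 = 384 tokens and ignoring the special tokens a real tokenizer reserves; `chunk_with_overlap` is a hypothetical helper, not the code from the fine-tuning notebook (Hugging Face tokenizers offer comparable behavior through `return_overflowing_tokens` and `stride`).

```python
def chunk_with_overlap(token_ids, max_length=512, overlap=128):
    """Split a token sequence into windows of max_length sharing `overlap` tokens."""
    if not 0 <= overlap < max_length:
        raise ValueError("overlap must satisfy 0 <= overlap < max_length")
    step = max_length - overlap  # how far each new window advances
    chunks = []
    start = 0
    while True:
        chunks.append(token_ids[start:start + max_length])
        # Stop once the current window reaches the end of the sequence.
        if start + max_length >= len(token_ids):
            break
        start += step
    return chunks


# A hypothetical page of 1000 tokens, represented by their positions.
page = list(range(1000))
chunks = chunk_with_overlap(page)
```

Because consecutive windows share 128 tokens, no part of a long page is dropped, which is what allows training on all layout and text data.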

At inference time, a calculation of best probabilities assigns a label to each paragraph bounding box.
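
One plausible reading of this step is sketched below: the per-token probabilities of all tokens sharing a paragraph bounding box are summed, and the label with the highest total wins. This is an assumption for illustration only; the helper `label_paragraphs`, the toy label set, and the boxes are hypothetical, and the inference notebook remains the reference for the actual calculation.

```python
from collections import defaultdict


def label_paragraphs(token_probs, token_boxes, labels):
    """Sum token probabilities per bounding box and keep the best label for each box."""
    sums = defaultdict(lambda: [0.0] * len(labels))
    for probs, box in zip(token_probs, token_boxes):
        acc = sums[box]
        for i, p in enumerate(probs):
            acc[i] += p  # accumulate probability mass per label
    return {
        box: labels[max(range(len(labels)), key=acc.__getitem__)]
        for box, acc in sums.items()
    }


# Toy example with two labels and two paragraph boxes (x0, y0, x1, y1).
labels = ["Text", "Title"]
token_probs = [[0.9, 0.1], [0.4, 0.6], [0.2, 0.8], [0.3, 0.7]]
token_boxes = [(0, 0, 100, 20), (0, 0, 100, 20), (0, 30, 100, 50), (0, 30, 100, 50)]
result = label_paragraphs(token_probs, token_boxes, labels)
```

Summing over a box also gives a natural way to merge predictions for tokens that appear in two overlapping chunks, since both copies contribute to the same box.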

Inference

See notebook: Document AI | Inference at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

Training and evaluation data

See notebook: Document AI | Fine-tune LiLT on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP
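
For readers who want to reuse these settings, the list above can be written as keyword arguments for `transformers.TrainingArguments`. This is a sketch under assumptions: the batch sizes are mapped to the per-device parameters (which matches the list only on a single device), and the `output_dir` in the commented usage is a hypothetical name.

```python
# Hyperparameters from the list above, keyed by TrainingArguments parameter names.
training_kwargs = {
    "learning_rate": 2e-5,
    "per_device_train_batch_size": 8,   # assumes a single device
    "per_device_eval_batch_size": 16,   # assumes a single device
    "seed": 42,
    "adam_beta1": 0.9,
    "adam_beta2": 0.999,
    "adam_epsilon": 1e-8,
    "lr_scheduler_type": "linear",
    "num_train_epochs": 1,
    "fp16": True,  # native AMP mixed precision
}

# Usage (requires the transformers library; output_dir is a hypothetical name):
# from transformers import TrainingArguments
# args = TrainingArguments(output_dir="lilt-doclaynet-paragraph", **training_kwargs)
```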

Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |
|---|---|---|---|---|---|---|---|
| No log | 0.05 | 100 | 0.9875 | 0.6585 | 0.6585 | 0.6585 | 0.6585 |
| No log | 0.11 | 200 | 0.7886 | 0.7551 | 0.7551 | 0.7551 | 0.7551 |
| No log | 0.16 | 300 | 0.5894 | 0.8248 | 0.8248 | 0.8248 | 0.8248 |
| No log | 0.21 | 400 | 0.4794 | 0.8396 | 0.8396 | 0.8396 | 0.8396 |
| 0.7446 | 0.27 | 500 | 0.3993 | 0.8703 | 0.8703 | 0.8703 | 0.8703 |
| 0.7446 | 0.32 | 600 | 0.3631 | 0.8857 | 0.8857 | 0.8857 | 0.8857 |
| 0.7446 | 0.37 | 700 | 0.4096 | 0.8630 | 0.8630 | 0.8630 | 0.8630 |
| 0.7446 | 0.43 | 800 | 0.4492 | 0.8528 | 0.8528 | 0.8528 | 0.8528 |
| 0.7446 | 0.48 | 900 | 0.3839 | 0.8834 | 0.8834 | 0.8834 | 0.8834 |
| 0.4464 | 0.53 | 1000 | 0.4365 | 0.8498 | 0.8498 | 0.8498 | 0.8498 |
| 0.4464 | 0.59 | 1100 | 0.3616 | 0.8812 | 0.8812 | 0.8812 | 0.8812 |
| 0.4464 | 0.64 | 1200 | 0.3949 | 0.8796 | 0.8796 | 0.8796 | 0.8796 |
| 0.4464 | 0.69 | 1300 | 0.4184 | 0.8613 | 0.8613 | 0.8613 | 0.8613 |
| 0.4464 | 0.75 | 1400 | 0.4130 | 0.8743 | 0.8743 | 0.8743 | 0.8743 |
| 0.3672 | 0.8 | 1500 | 0.4535 | 0.8289 | 0.8289 | 0.8289 | 0.8289 |
| 0.3672 | 0.85 | 1600 | 0.3681 | 0.8713 | 0.8713 | 0.8713 | 0.8713 |
| 0.3672 | 0.91 | 1700 | 0.3446 | 0.8857 | 0.8857 | 0.8857 | 0.8857 |
| 0.3672 | 0.96 | 1800 | 0.4104 | 0.8634 | 0.8634 | 0.8634 | 0.8634 |

Framework versions

- Transformers 4.26.1

- PyTorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

Other models

- Line level

- Document Understanding model (finetuned LiLT base at line level on DocLayNet base) (accuracy | tokens: 85.84% - lines: 91.97%)

- Document Understanding model (finetuned LayoutXLM base at line level on DocLayNet base) (accuracy | tokens: 93.73% - lines: ...)

- Paragraph level

- Document Understanding model (finetuned LiLT base at paragraph level on DocLayNet base) (accuracy | tokens: 86.34% - paragraphs: 68.15%)

- Document Understanding model (finetuned LayoutXLM base at paragraph level on DocLayNet base) (accuracy | tokens: 96.93% - paragraphs: 86.55%)