Model:

h2oai/h2ogpt-oasst1-512-12b

h2oGPT Model Card

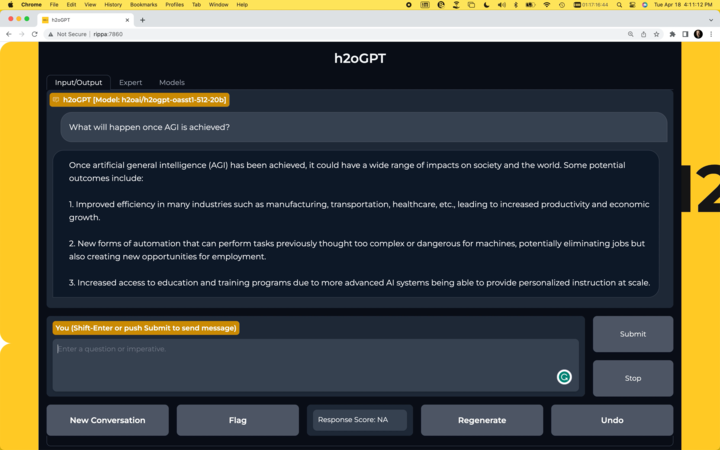

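Overview

H2O.ai's h2ogpt-oasst1-512-12b is a 12-billion-parameter large language model licensed for commercial use.

- Base model: EleutherAI/pythia-12b

- Fine-tuning dataset: h2oai/openassistant_oasst1_h2ogpt_graded

- Data preparation and fine-tuning code: H2O.ai GitHub

- Training logs: zip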

Chatbot

- Run your own chatbot: H2O.ai GitHub

Usage

To use the model with the transformers library on a machine with GPUs, first make sure the transformers and accelerate libraries are installed.

pip install transformers==4.28.1

pip install accelerate==0.18.0
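To confirm that the environment matches the versions pinned above before loading the model, a quick check along these lines may help. This is a minimal sketch using only the standard library; the helper name `check_pins` and the `PINNED` dictionary are illustrative and not part of the h2oGPT code.

```python
from importlib.metadata import PackageNotFoundError, version

# Versions pinned by the install commands in this card.
PINNED = {"transformers": "4.28.1", "accelerate": "0.18.0"}


def check_pins(pinned, get_version=version):
    """Return {package: (expected, installed_or_None)} for every mismatch.

    `get_version` is injectable so the check can be exercised without
    the packages actually being installed.
    """
    mismatches = {}
    for name, expected in pinned.items():
        try:
            installed = get_version(name)
        except PackageNotFoundError:
            installed = None  # package is not installed at all
        if installed != expected:
            mismatches[name] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    for name, (expected, installed) in check_pins(PINNED).items():
        print(f"{name}: expected {expected}, found {installed}")
```

An empty result means both packages are installed at the pinned versions. Other versions may also work; the pins are simply the ones this card lists.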

import torch

from transformers import pipeline

generate_text = pipeline(model="h2oai/h2ogpt-oasst1-512-12b", torch_dtype=torch.bfloat16, trust_remote_code=True, device_map="auto", prompt_type='human_bot')

res = generate_text("Why is drinking water so healthy?", max_new_tokens=100)

print(res[0]["generated_text"])

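Alternatively, if you prefer not to use trust_remote_code=True, you can download h2oai_pipeline.py, store it alongside your notebook, and construct the pipeline yourself from the loaded model and tokenizer: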

import torch

from h2oai_pipeline import H2OTextGenerationPipeline

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("h2oai/h2ogpt-oasst1-512-12b", padding_side="left")

model = AutoModelForCausalLM.from_pretrained("h2oai/h2ogpt-oasst1-512-12b", torch_dtype=torch.bfloat16, device_map="auto")

generate_text = H2OTextGenerationPipeline(model=model, tokenizer=tokenizer, prompt_type='human_bot')

res = generate_text("Why is drinking water so healthy?", max_new_tokens=100)

print(res[0]["generated_text"])
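Both loading paths above use `torch_dtype=torch.bfloat16` and `device_map="auto"`. A rough back-of-the-envelope estimate shows why a model of this size is typically spread across devices. The sketch below assumes the nominal 12 billion parameters stated in this card and counts weights only; activations, the KV cache, and framework overhead are not included, so real usage will be higher.

```python
# Nominal size from this card ("12 billion parameters"); the exact count
# is slightly lower, so treat the result as an approximation.
NOMINAL_PARAMS = 12_000_000_000

# Bytes needed to store one parameter in each dtype.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}


def weight_memory_gib(num_params, dtype):
    """Memory needed for the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for dtype in ("float32", "bfloat16"):
    print(f"{dtype}: {weight_memory_gib(NOMINAL_PARAMS, dtype):.1f} GiB")
```

Under these assumptions the bfloat16 weights need roughly 22 GiB, about half of what float32 would require.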

Model Architecture

GPTNeoXForCausalLM(
  (gpt_neox): GPTNeoXModel(
    (embed_in): Embedding(50688, 5120)
    (layers): ModuleList(
      (0-35): 36 x GPTNeoXLayer(
        (input_layernorm): LayerNorm((5120,), eps=1e-05, elementwise_affine=True)
        (post_attention_layernorm): LayerNorm((5120,), eps=1e-05, elementwise_affine=True)
        (attention): GPTNeoXAttention(
          (rotary_emb): RotaryEmbedding()
          (query_key_value): Linear(in_features=5120, out_features=15360, bias=True)
          (dense): Linear(in_features=5120, out_features=5120, bias=True)
        )
        (mlp): GPTNeoXMLP(
          (dense_h_to_4h): Linear(in_features=5120, out_features=20480, bias=True)
          (dense_4h_to_h): Linear(in_features=20480, out_features=5120, bias=True)
          (act): GELUActivation()
        )
      )
    )
    (final_layer_norm): LayerNorm((5120,), eps=1e-05, elementwise_affine=True)
  )
  (embed_out): Linear(in_features=5120, out_features=50688, bias=False)
)
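The "12 billion" figure can be cross-checked against the layer shapes printed above. The sketch below simply adds up the weights and biases of each listed module. It assumes that `RotaryEmbedding` and `GELUActivation` hold no trainable parameters and that each `LayerNorm` with `elementwise_affine=True` has one weight and one bias vector; the helper names are illustrative.

```python
def linear(in_features, out_features, bias=True):
    """Parameter count of a Linear layer: weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


def layer_norm(dim):
    """Parameter count of an affine LayerNorm: weight and bias vectors."""
    return 2 * dim


HIDDEN, VOCAB, NUM_LAYERS = 5120, 50688, 36

# One GPTNeoXLayer, following the shapes in the printout.
per_layer = (
    2 * layer_norm(HIDDEN)        # input_layernorm + post_attention_layernorm
    + linear(HIDDEN, 15360)       # attention.query_key_value
    + linear(HIDDEN, HIDDEN)      # attention.dense
    + linear(HIDDEN, 20480)       # mlp.dense_h_to_4h
    + linear(20480, HIDDEN)       # mlp.dense_4h_to_h
)

total = (
    VOCAB * HIDDEN                        # embed_in
    + NUM_LAYERS * per_layer              # 36 transformer layers
    + layer_norm(HIDDEN)                  # final_layer_norm
    + linear(HIDDEN, VOCAB, bias=False)   # embed_out
)

print(f"per layer: {per_layer:,}")
print(f"total:     {total:,}")  # 11,846,072,320
```

Under these assumptions the total comes to about 11.85 billion parameters, which is consistent with the model being described as a 12B model.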

Model Configuration

GPTNeoXConfig {
  "_name_or_path": "h2oai/h2ogpt-oasst1-512-12b",
  "architectures": [
    "GPTNeoXForCausalLM"
  ],
  "bos_token_id": 0,
  "classifier_dropout": 0.1,
  "custom_pipelines": {
    "text-generation": {
      "impl": "h2oai_pipeline.H2OTextGenerationPipeline",
      "pt": "AutoModelForCausalLM"
    }
  },
  "eos_token_id": 0,
  "hidden_act": "gelu",
  "hidden_size": 5120,
  "initializer_range": 0.02,
  "intermediate_size": 20480,
  "layer_norm_eps": 1e-05,
  "max_position_embeddings": 2048,
  "model_type": "gpt_neox",
  "num_attention_heads": 40,
  "num_hidden_layers": 36,
  "rotary_emb_base": 10000,
  "rotary_pct": 0.25,
  "tie_word_embeddings": false,
  "torch_dtype": "float16",
  "transformers_version": "4.30.0.dev0",
  "use_cache": true,
  "use_parallel_residual": true,
  "vocab_size": 50688
}
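A few derived quantities follow directly from the configuration values above. The sketch below is illustrative arithmetic only. It assumes the rotary dimension is computed as `int(head_dim * rotary_pct)`, which is how the GPT-NeoX implementation in transformers is understood to do it, and that the KV cache stores one key and one value vector of size `hidden_size` per layer per token at 2 bytes per element (matching `"torch_dtype": "float16"`).

```python
# Values copied from the configuration in this card.
config = {
    "hidden_size": 5120,
    "num_attention_heads": 40,
    "num_hidden_layers": 36,
    "rotary_pct": 0.25,
    "max_position_embeddings": 2048,
}

# Size of each attention head.
head_dim = config["hidden_size"] // config["num_attention_heads"]

# Number of dimensions per head that receive rotary position embeddings
# (assumed convention: a fraction rotary_pct of each head).
rotary_dims = int(head_dim * config["rotary_pct"])

# KV cache per token: key + value, for every layer, 2 bytes per element.
BYTES_PER_ELEMENT = 2
kv_bytes_per_token = (
    2 * config["num_hidden_layers"] * config["hidden_size"] * BYTES_PER_ELEMENT
)

# KV cache for one sequence at the maximum context length, in GiB.
kv_gib_full_context = (
    kv_bytes_per_token * config["max_position_embeddings"] / 2**30
)

print(f"head_dim = {head_dim}")                        # 128
print(f"rotary_dims = {rotary_dims}")                  # 32
print(f"KV cache per token = {kv_bytes_per_token:,} bytes")  # 737,280
print(f"KV cache at 2048 tokens = {kv_gib_full_context:.2f} GiB")
```

With these assumptions, a single full-length sequence adds roughly 1.4 GiB of cache on top of the model weights.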

Model Validation

Model validation results using the EleutherAI lm-evaluation-harness.

| Task | Version | Metric | Value | Stderr |
|---|---|---|---|---|
| arc_challenge | 0 | acc | 0.3157 | ± 0.0136 |
| | | acc_norm | 0.3507 | ± 0.0139 |
| arc_easy | 0 | acc | 0.6932 | ± 0.0095 |
| | | acc_norm | 0.6225 | ± 0.0099 |
| boolq | 1 | acc | 0.6685 | ± 0.0082 |
| hellaswag | 0 | acc | 0.5140 | ± 0.0050 |
| | | acc_norm | 0.6803 | ± 0.0047 |
| openbookqa | 0 | acc | 0.2900 | ± 0.0203 |
| | | acc_norm | 0.3740 | ± 0.0217 |
| piqa | 0 | acc | 0.7682 | ± 0.0098 |
| | | acc_norm | 0.7661 | ± 0.0099 |
| winogrande | 0 | acc | 0.6369 | ± 0.0135 |
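To put the reported standard errors in context, the sketch below turns each `acc` value into an approximate 95% confidence interval and computes an unweighted mean across tasks. It assumes a normal approximation (value ± 1.96 × stderr); the interval and the mean are illustrative summaries and are not figures reported by the evaluation harness.

```python
# (acc, stderr) pairs copied from the table in this card.
results = {
    "arc_challenge": (0.3157, 0.0136),
    "arc_easy": (0.6932, 0.0095),
    "boolq": (0.6685, 0.0082),
    "hellaswag": (0.5140, 0.0050),
    "openbookqa": (0.2900, 0.0203),
    "piqa": (0.7682, 0.0098),
    "winogrande": (0.6369, 0.0135),
}


def ci95(value, stderr, z=1.96):
    """Approximate 95% confidence interval under a normal approximation."""
    return value - z * stderr, value + z * stderr


for task, (acc, stderr) in results.items():
    low, high = ci95(acc, stderr)
    print(f"{task:14s} acc={acc:.4f}  95% CI=[{low:.4f}, {high:.4f}]")

# Unweighted mean of acc over the seven tasks.
mean_acc = sum(acc for acc, _ in results.values()) / len(results)
print(f"mean acc = {mean_acc:.4f}")
```

The intervals make it easier to judge whether a difference between two models on a given task is larger than the measurement noise.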

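Disclaimer

Please read this disclaimer carefully before using the large language model provided in this repository. Your use of the model signifies your agreement to the following terms and conditions.

- Biases and Offensiveness: The large language model is trained on a diverse range of internet text data, which may contain biased, racist, offensive, or otherwise inappropriate content. By using this model, you acknowledge and accept that the generated content may sometimes exhibit biases or be offensive or inappropriate. The developers of this repository do not endorse, support, or promote any such content or viewpoints.

- Limitations: The large language model is an AI-based tool and not a human. It may produce incorrect, nonsensical, or irrelevant responses. It is the user's responsibility to critically evaluate the generated content and use it at their own discretion.

- Use at Your Own Risk: Users of this large language model must assume full responsibility for any consequences arising from their use or misuse of the provided model. The developers and contributors of this repository shall not be held liable for any damages, losses, or harm resulting from the use or misuse of the provided model.

- Ethical Considerations: Users are encouraged to use the large language model responsibly and ethically. By using this model, you agree not to use it for purposes that promote hate speech, discrimination, harassment, or any form of illegal or harmful activity.

- Reporting Issues: If you encounter any biased, offensive, or otherwise inappropriate content generated by the large language model, please report it to the repository maintainers through the provided channels. Your feedback will help improve the model and mitigate potential issues.

- Changes to this Disclaimer: The developers of this repository reserve the right to modify or update this disclaimer at any time without prior notice. It is the user's responsibility to periodically review the disclaimer to stay informed about any changes.

By using the large language model provided in this repository, you agree to accept and comply with the terms and conditions outlined in this disclaimer. If you do not agree with any part of this disclaimer, you should refrain from using the model and any content generated by it.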