Model:

SenseTime/deformable-detr

Deformable DETR model with ResNet-50 backbone

Deformable DEtection TRansformer (DETR) model trained end-to-end on COCO 2017 object detection (118k annotated images). It was introduced in the paper Deformable DETR: Deformable Transformers for End-to-End Object Detection by Zhu et al. and first released in this repository.

Disclaimer: The team releasing Deformable DETR did not write a model card for this model, so this model card has been written by the Hugging Face team.

Model description

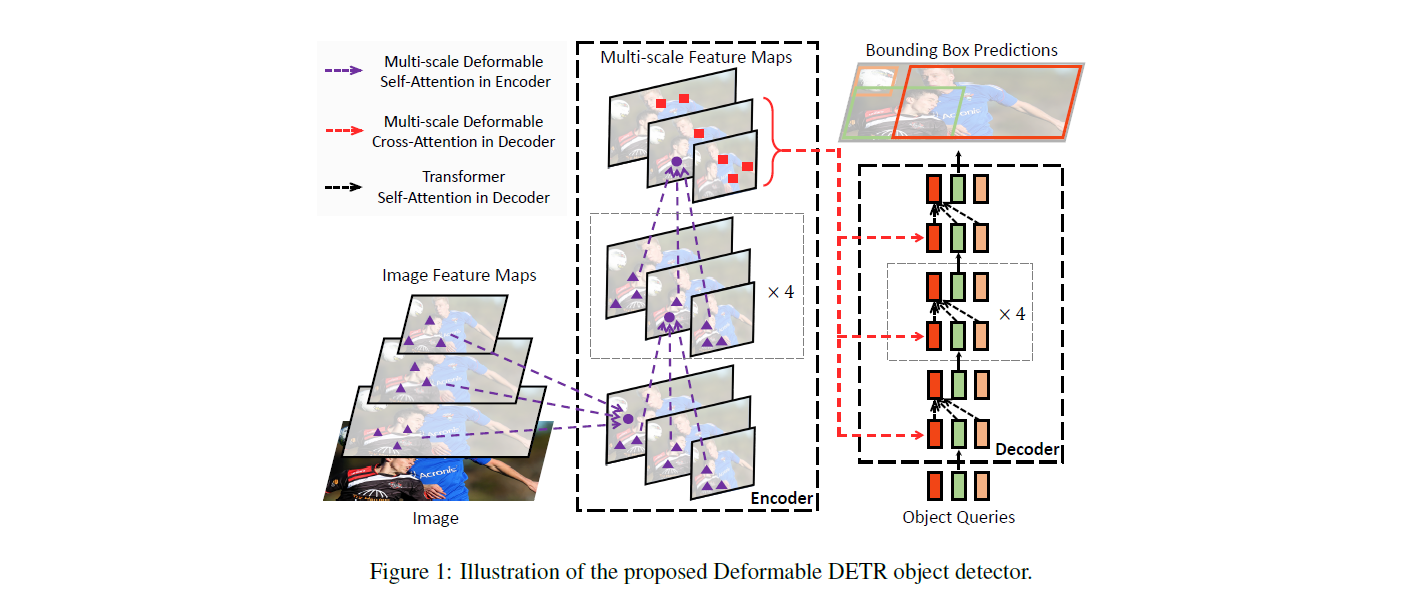

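The DETR model is an encoder-decoder transformer with a convolutional backbone. Two heads are added on top of the decoder outputs to perform object detection: a linear layer for the class labels and an MLP (multi-layer perceptron) for the bounding boxes. The model uses so-called object queries to detect objects in an image. Each object query looks for one particular object in the image. For COCO, the number of object queries is set to 100.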
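As a rough illustration of the two heads described above, the following PyTorch sketch applies a linear classification layer and a small MLP to a batch of decoder outputs, one vector per object query. The class name `DetectionHeads`, the hidden size of 256, the 91 classes, the extra "no object" logit, the three-layer MLP and the final sigmoid are all assumptions made for this example; they are not taken from the released implementation.

```python
import torch
from torch import nn


class DetectionHeads(nn.Module):
    """Hypothetical sketch: a linear class head and an MLP box head."""

    def __init__(self, hidden_dim=256, num_classes=91, num_box_layers=3):
        super().__init__()
        # One extra logit for the "no object" class (assumption).
        self.class_head = nn.Linear(hidden_dim, num_classes + 1)
        layers = []
        for _ in range(num_box_layers - 1):
            layers += [nn.Linear(hidden_dim, hidden_dim), nn.ReLU()]
        layers.append(nn.Linear(hidden_dim, 4))
        self.box_head = nn.Sequential(*layers)

    def forward(self, decoder_output):
        # decoder_output: (batch, num_queries, hidden_dim)
        logits = self.class_head(decoder_output)
        # Sigmoid keeps the four box values in [0, 1] (assumption:
        # boxes are expressed relative to the image size).
        boxes = self.box_head(decoder_output).sigmoid()
        return logits, boxes


heads = DetectionHeads()
queries = torch.randn(1, 100, 256)  # 100 object queries, as in the text
logits, boxes = heads(queries)
print(logits.shape, boxes.shape)
```

Each of the 100 queries yields one class distribution and one box, which is why the number of queries caps the number of detections per image.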

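The model is trained using a "bipartite matching loss": the predicted class and bounding box of each of the N = 100 object queries are compared to the ground truth annotations, which are padded to the same length N (so if an image contains only 4 objects, the remaining 96 annotations have "no object" as class and "no bounding box" as bounding box). The Hungarian matching algorithm is used to create an optimal one-to-one mapping between the N queries and the N annotations. Next, standard cross-entropy (for the classes) and a linear combination of the L1 loss and the generalized IoU loss (for the bounding boxes) are used to optimize the parameters of the model.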
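The matching step can be sketched in plain Python on a toy example. The code below pads the ground truth with "no object" entries, builds a cost from the class probability, the L1 box distance and the generalized IoU, and then searches all permutations for the cheapest one-to-one assignment. The exhaustive search is a stand-in for the Hungarian algorithm and is only feasible for tiny N; in practice a solver such as `scipy.optimize.linear_sum_assignment` would be used. The cost weights (1, 5, 2), the `NO_OBJECT` id, the zero cost for padded slots and all function names are assumptions for illustration.

```python
from itertools import permutations

NO_OBJECT = -1  # hypothetical label id for padded "no object" annotations


def box_area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def generalized_iou(a, b):
    """GIoU of two non-degenerate (x_min, y_min, x_max, y_max) boxes."""
    inter = box_area((max(a[0], b[0]), max(a[1], b[1]),
                      min(a[2], b[2]), min(a[3], b[3])))
    union = box_area(a) + box_area(b) - inter
    hull = box_area((min(a[0], b[0]), min(a[1], b[1]),
                     max(a[2], b[2]), max(a[3], b[3])))
    return inter / union - (hull - union) / hull


def match_cost(pred, target, w_class=1.0, w_l1=5.0, w_giou=2.0):
    if target["label"] == NO_OBJECT:
        return 0.0  # padded slots do not influence the matching (assumption)
    class_cost = -pred["probs"][target["label"]]
    l1_cost = sum(abs(p - t) for p, t in zip(pred["box"], target["box"]))
    giou_cost = -generalized_iou(pred["box"], target["box"])
    return w_class * class_cost + w_l1 * l1_cost + w_giou * giou_cost


def brute_force_match(preds, targets):
    """Return {query index: assigned label} for the cheapest assignment."""
    n = len(preds)
    padded = targets + [{"label": NO_OBJECT, "box": None}] * (n - len(targets))
    best = min(
        permutations(range(n)),
        key=lambda perm: sum(match_cost(preds[q], padded[t])
                             for t, q in enumerate(perm)),
    )
    return {q: padded[t]["label"] for t, q in enumerate(best)}


# Three queries, one ground-truth object of class 0.
preds = [
    {"probs": [0.1, 0.9], "box": (0.5, 0.5, 0.9, 0.9)},
    {"probs": [0.8, 0.2], "box": (0.1, 0.1, 0.4, 0.4)},
    {"probs": [0.3, 0.7], "box": (0.0, 0.0, 1.0, 1.0)},
]
targets = [{"label": 0, "box": (0.1, 0.1, 0.4, 0.5)}]
assignment = brute_force_match(preds, targets)
print(assignment)
```

Here the second query is matched to the object because it combines a high class probability with a well-overlapping box; the other two queries are assigned "no object". The training losses are then computed on the matched pairs.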

Intended uses & limitations

You can use the raw model for object detection. See the model hub to look for all available Deformable DETR models.

How to use

Here is how to use this model:

from transformers import AutoImageProcessor, DeformableDetrForObjectDetection
import torch
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

processor = AutoImageProcessor.from_pretrained("SenseTime/deformable-detr")
model = DeformableDetrForObjectDetection.from_pretrained("SenseTime/deformable-detr")

inputs = processor(images=image, return_tensors="pt")
outputs = model(**inputs)

# convert outputs (bounding boxes and class logits) to COCO API
# let's only keep detections with score > 0.7
target_sizes = torch.tensor([image.size[::-1]])
results = processor.post_process_object_detection(outputs, target_sizes=target_sizes, threshold=0.7)[0]

for score, label, box in zip(results["scores"], results["labels"], results["boxes"]):
    box = [round(i, 2) for i in box.tolist()]
    print(
        f"Detected {model.config.id2label[label.item()]} with confidence "
        f"{round(score.item(), 3)} at location {box}"
    )

This should output:

Detected cat with confidence 0.856 at location [342.19, 24.3, 640.02, 372.25]
Detected remote with confidence 0.739 at location [40.79, 72.78, 176.76, 117.25]
Detected cat with confidence 0.859 at location [16.5, 52.84, 318.25, 470.78]
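To make the post-processing step more concrete, here is a simplified plain-Python sketch of the kind of work it involves: dropping low-confidence detections and converting boxes from normalized (center_x, center_y, width, height) to absolute (x_min, y_min, x_max, y_max) pixel coordinates. It is not the library's implementation; the function names, the input values and the image size are hypothetical. Note that PIL's `image.size` is (width, height), so `image.size[::-1]` in the snippet above yields (height, width), the order assumed here.

```python
def center_to_corners(box, image_height, image_width):
    """Convert a normalized (center_x, center_y, width, height) box to
    absolute (x_min, y_min, x_max, y_max) pixel coordinates."""
    cx, cy, w, h = box
    return [
        (cx - w / 2) * image_width,
        (cy - h / 2) * image_height,
        (cx + w / 2) * image_width,
        (cy + h / 2) * image_height,
    ]


def keep_confident(scores, labels, boxes, target_size, threshold=0.7):
    """Keep detections scoring above the threshold, with rescaled boxes."""
    height, width = target_size
    return [
        (score, label, center_to_corners(box, height, width))
        for score, label, box in zip(scores, labels, boxes)
        if score > threshold
    ]


# Hypothetical raw predictions for a 640x480 (width x height) image.
scores = [0.9, 0.4]
labels = [17, 75]
boxes = [(0.5, 0.5, 0.5, 0.5), (0.2, 0.2, 0.1, 0.1)]
detections = keep_confident(scores, labels, boxes, target_size=(480, 640))
print(detections)
```

Only the first detection passes the 0.7 threshold, and its box is expressed in pixels of the original image rather than in relative units.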

Currently, both the image processor and the model support PyTorch.

Training data

The Deformable DETR model was trained on COCO 2017 object detection, a dataset consisting of 118k/5k annotated images for training/validation respectively.

BibTeX entry and citation info

@misc{https://doi.org/10.48550/arxiv.2010.04159,
  doi = {10.48550/ARXIV.2010.04159},
  url = {https://arxiv.org/abs/2010.04159},
  author = {Zhu, Xizhou and Su, Weijie and Lu, Lewei and Li, Bin and Wang, Xiaogang and Dai, Jifeng},
  keywords = {Computer Vision and Pattern Recognition (cs.CV), FOS: Computer and information sciences},
  title = {Deformable DETR: Deformable Transformers for End-to-End Object Detection},
  publisher = {arXiv},
  year = {2020},
  copyright = {arXiv.org perpetual, non-exclusive license}
}